Transforming Access to Vedic Knowledge with Generative AI

Advanced AI and AWS Infrastructure Deliver Deep, Accurate Insights on Vedic Literature

Empowering Vedic Knowledge with Advanced AI and AWS Tools

Overview

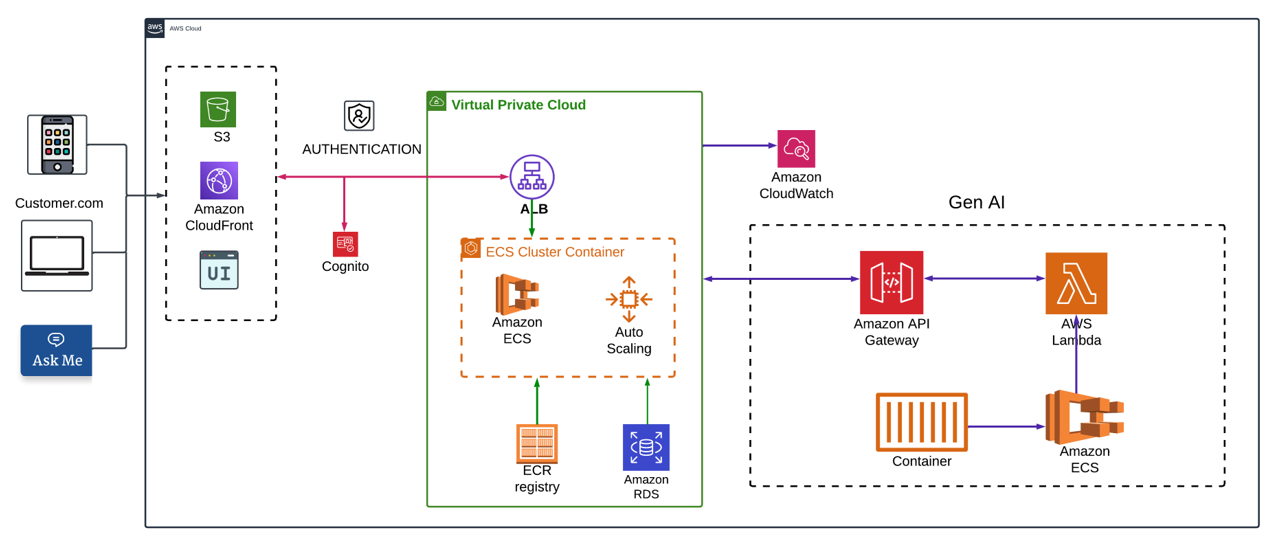

The Svarupa AI Chatbot was developed as an intelligent question-and-answer system for the Svarupa application, designed specifically to address inquiries about Vedic scriptures. This chatbot leverages GenAI and Large Language Models (LLMs) to deliver nuanced, accurate, and insightful responses to a wide range of questions related to Vedic literature. Acting as both an educational tool and conversational assistant, the Svarupa AI Chatbot enables users to access detailed explanations, definitions, and historical insights with ease. Its architecture, supported by AWS cloud services and AI methodologies, ensures high performance, scalability, and adaptability to handle complex and domain-specific queries effectively.

Situation

The Svarupa application’s knowledge base contains extensive structured and unstructured data, so the chatbot needed to handle dynamic user queries with accuracy, contextual relevance, and responsiveness. The data includes unstructured documents (PDFs, Word files) and structured content in OpenSearch indexes such as brahmanas_header, brahmanas_summaries, and term_details, which cover Vedic mantras, term morphology, padapatha, and related details.

To achieve a high level of response accuracy and relevance, the team adopted Retrieval-Augmented Generation (RAG) and function calling to augment user queries with content from internal and external data sources. This approach required an AWS infrastructure that keeps operations secure and scalable as user demand grows.
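
The retrieve-then-augment idea behind RAG can be illustrated with a minimal, dependency-free sketch. It is not the project's implementation: the bag-of-words `embed` function is a toy stand-in for a real embedding model, and `chunk_text`, `retrieve`, and `build_prompt` are hypothetical helper names chosen for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int = 12, overlap: int = 4) -> list[str]:
    """Fixed-size chunking over words, with overlap between neighbouring chunks."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Prepend the retrieved chunks to the user question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"
```

In a production setting the toy `embed` would be replaced by calls to an embedding model and the in-memory ranking by a vector index; the control flow (chunk, embed, rank, build the prompt) stays the same.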

Task

- Create a Conversational GenAI Chatbot: Build a conversational AI that harnesses GenAI capabilities for deep, contextual understanding of Vedic literature.

- Integrate Domain-Specific Knowledge: Equip GenAI to handle various types of Vedic-related queries, including questions on padapatha, devata, and interpretations (adibhautic, adhyatmic, and adidaivic), as well as statistical and factual questions about the Svarupa knowledge base.

- Harness AWS and DevOps for Stability and Growth: Ensure that the chatbot’s architecture is scalable, reliable, and secure, leveraging AWS services and DevOps practices to maintain high performance and adaptability.

Action

GenAI-Powered Chatbot Implementation

- Data Preprocessing: The team processed unstructured documents with OCR (AWS-Tesseract) and metadata extraction to create a structured dataset from PDFs, Word files, and Svarupa’s database. Metadata such as author, source, and title were extracted to provide context-rich responses.

- Veda Content and Term Details: The processed text is stored in AWS RDS and OpenSearch indexes. This structured data enables the chatbot to respond accurately to both general and in-depth queries.

- Retrieval-Augmented Generation (RAG):

- RAG allowed GenAI to retrieve and integrate relevant information dynamically before generating a response. This technique ensured that the responses were accurate and contextually enriched.

- Embeddings: High-dimensional vector embeddings of Vedic content were created using OpenAI embedding models such as text-embedding-ada-002, along with others suited to specific language and context nuances.

- Dynamic Chunking Strategies: Multiple chunking methods were tested, including fixed-size, semantic, and recursive chunking, to optimize the retrieval of the most relevant data segments based on the complexity and type of user query.

- Indexing with OpenSearch: Documents were indexed in OpenSearch Vector Database to support rapid retrieval. OpenSearch Vector Database, chosen for its hybrid search capabilities and faster response times, provided free indexing for smaller datasets while supporting growth as the indexed document count increases.

- Advanced Function Calling with GenAI: To enhance the LLM’s ability to respond to specific requests, Function Calling was integrated with OpenAI’s capabilities, allowing GenAI to execute specialized tasks efficiently.

- Function Types: A suite of custom functions—such as get_vedamantra_details, get_pada_meaning, get_pada_morphology, and get_audios_by_query—were developed to provide users with targeted responses based on their unique queries.

- LangChain Orchestration: LangChain’s StructuredTool and LangGraph components allowed GenAI to manage function calling with precision, thus delivering structured responses that matched user intent.

- Text-Opensearch Agent: The team developed a novel agent, the “text-opensearch” agent, which helps users get the most accurate answers from the Svarupa knowledge base.
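
The function calling flow described above can be sketched as a tool registry plus a dispatcher. This is an illustrative outline only: the function names `get_pada_meaning` and `get_vedamantra_details` come from the list above, but their parameters (`pada`, `mantra_id`), return shapes, and the `dispatch` helper are assumptions, and the bodies are placeholders rather than real lookups.

```python
import json
from typing import Any, Callable


def get_pada_meaning(pada: str) -> dict[str, Any]:
    # Placeholder body (assumption): a real version would query the knowledge base.
    return {"pada": pada, "meaning": "<looked up in the knowledge base>"}


def get_vedamantra_details(mantra_id: str) -> dict[str, Any]:
    # Placeholder body (assumption): parameter name and result shape are hypothetical.
    return {"mantra_id": mantra_id, "details": "<looked up in the knowledge base>"}


# Maps the tool name the model emits to the Python callable that serves it.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "get_pada_meaning": get_pada_meaning,
    "get_vedamantra_details": get_vedamantra_details,
}

# JSON Schema descriptions of the kind sent to the model alongside the conversation.
TOOL_SPECS = [
    {
        "type": "function",
        "function": {
            "name": "get_pada_meaning",
            "description": "Return the meaning of a single pada (word).",
            "parameters": {
                "type": "object",
                "properties": {"pada": {"type": "string"}},
                "required": ["pada"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "get_vedamantra_details",
            "description": "Return details for one mantra.",
            "parameters": {
                "type": "object",
                "properties": {"mantra_id": {"type": "string"}},
                "required": ["mantra_id"],
            },
        },
    },
]


def dispatch(name: str, arguments_json: str) -> str:
    """Run the tool the model asked for and return a JSON string for the next turn."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        arguments = json.loads(arguments_json)
        result = TOOLS[name](**arguments)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong argument names are reported back, not raised.
        return json.dumps({"error": str(exc)})
    return json.dumps(result)
```

Returning errors as JSON instead of raising lets the model see what went wrong and retry with corrected arguments.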

AWS and DevOps Integration for Seamless Scalability

- Serverless Lambda Deployment: GenAI was deployed on AWS Lambda to utilize a scalable, serverless environment for handling user queries.

- Lambda with FastAPI and Mangum: The FastAPI application was integrated with AWS Lambda using Mangum, making it serverless and allowing it to handle increasing user demands without dedicated servers.

- ECR and Dockerized Infrastructure: The application was containerized with Docker, stored on AWS Elastic Container Registry (ECR), and deployed via AWS Lambda, ensuring flexible scaling and resource-efficient operation.

- Continuous Integration/Continuous Deployment (CI/CD) Pipelines: CI/CD was set up using AWS CodePipeline and CodeDeploy to streamline application updates and maintain GenAI’s high uptime.

- Automated Testing and Deployment: Regular updates and tests were automated, minimizing downtime and ensuring GenAI remains updated and responsive to user demands.

- Real-Time Monitoring, Security, and Performance Optimization:

- AWS CloudWatch: Enabled real-time monitoring of key metrics such as response latency, system health, and error rates, allowing proactive performance adjustments.

- IAM Roles and KMS: Ensured secure access control with IAM roles and data encryption via AWS Key Management Service (KMS), protecting sensitive data and user interactions.
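
To make the serverless request path concrete, the sketch below shows the event and response shapes that an adapter such as Mangum translates between API Gateway and a web application. It deliberately avoids importing FastAPI or Mangum so that it stays dependency-free; with Mangum, the documented entry point is typically a single line, `handler = Mangum(app)`. The `/chat` route, the `question` field, and `answer_question` are hypothetical, and the event layout assumes the API Gateway REST proxy format.

```python
import json
from typing import Any


def answer_question(question: str) -> str:
    """Hypothetical stand-in for the chatbot pipeline."""
    return f"(answer to: {question})"


def _response(status: int, body: dict[str, Any]) -> dict[str, Any]:
    """Build a proxy-integration response: status code, headers, JSON string body."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Lambda entry point for a REST proxy event (base64-encoded bodies not handled)."""
    if event.get("httpMethod") != "POST" or event.get("path") != "/chat":
        return _response(404, {"error": "not found"})
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})
    question = payload.get("question") if isinstance(payload, dict) else None
    if not isinstance(question, str) or not question.strip():
        return _response(400, {"error": "'question' must be a non-empty string"})
    return _response(200, {"answer": answer_question(question)})
```

Because the handler is a plain function of `event`, it can be exercised locally with a dictionary before any deployment.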

Orchestration and Workflow Management with Advanced AI Techniques

- LangChain and LangGraph: LangChain provided the framework for managing complex multi-step workflows, enabling seamless integration with the GenAI functions. LangGraph enhanced LangChain’s capabilities by allowing the system to handle stateful, multi-step queries effectively.

- Advanced RAG Techniques: By implementing corrective and adaptive RAG (self-reflective and multi-step retrieval), GenAI improved the relevance and accuracy of responses to complex user queries, ensuring a higher quality of engagement.
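
A corrective, multi-step retrieval loop of the kind mentioned above can be outlined as follows. This is a sketch under assumptions, not the project's code: in a real system the `grade` and `rewrite` steps would usually be LLM calls and the loop would be expressed as graph nodes, whereas here they are plain callables passed in by the caller. The name `corrective_retrieve` and its parameters are hypothetical.

```python
from typing import Callable


def corrective_retrieve(
    query: str,
    retrieve: Callable[[str], list[str]],
    grade: Callable[[str, str], float],
    rewrite: Callable[[str], str],
    max_steps: int = 3,
    threshold: float = 0.5,
) -> tuple[list[str], int]:
    """Retrieve, grade the results, and rewrite the query when nothing is relevant.

    Returns the documents that passed grading and the number of retrieval
    steps used. An empty list means every attempt failed, so the caller can
    fall back (for example, by answering that no source was found).
    """
    current = query
    for step in range(1, max_steps + 1):
        candidates = retrieve(current)
        # Always grade against the ORIGINAL question, not the rewritten one,
        # so query rewriting cannot drift away from what the user asked.
        relevant = [doc for doc in candidates if grade(query, doc) >= threshold]
        if relevant:
            return relevant, step
        current = rewrite(current)
    return [], max_steps
```

Capping the loop with `max_steps` bounds latency and cost when no relevant document exists.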

Results

The AWS and DevOps integration, combined with GenAI’s LLM-driven capabilities, produced impressive results for the chatbot platform:

- Enhanced User Engagement and Satisfaction: With refined indexing, chunking, and function calling strategies, GenAI provided faster, more accurate, and contextually relevant responses, which led to higher user engagement and satisfaction scores.

- Seamless Scalability: AWS Lambda’s serverless architecture allowed GenAI to dynamically scale according to demand, reducing operating costs and maintaining response times even with increasing data volume and user load.

- Optimized Operational Efficiency: CI/CD pipelines enabled automated deployment and updates, while AWS CloudWatch provided valuable insights that helped improve performance and minimize downtime.

- High Security Standards: Leveraging IAM roles, encryption via AWS KMS, and secure data handling practices, GenAI achieved robust data protection, aligning with best practices for sensitive data management.

Conclusion

GenAI has established itself as a pioneering platform in the realm of Vedic knowledge dissemination, combining conversational AI capabilities with scalable AWS infrastructure and efficient DevOps practices. By leveraging LLM advancements, Retrieval-Augmented Generation, and Function Calling, GenAI ensures accurate, user-centric responses that provide meaningful insights into Vedic topics. The architecture is flexible, enabling expansion to multi-language support, integration of open-source LLMs, and further enhancements in retrieval and orchestration methods.

The success of the GenAI Chatbot shows how a well-architected GenAI solution, underpinned by robust AWS services and DevOps tools, can turn complex data into accessible, valuable, and engaging user experiences in the domain of cultural and spiritual knowledge.

Copyright © Soulax. All Rights Reserved.