ML-Powered Transcription for Vedic Knowledge

Overview

Svarupa, a nonprofit digital initiative, aims to preserve and disseminate ancient Vedic knowledge by transcribing Sanskrit chants into text with precise word-level timestamps. The platform caters to researchers and spiritual enthusiasts by providing highly accurate transcriptions of long-form chanting, which is essential for in-depth study and analysis. A robust ML pipeline was required to manage the transcription challenges associated with Vedic chanting.

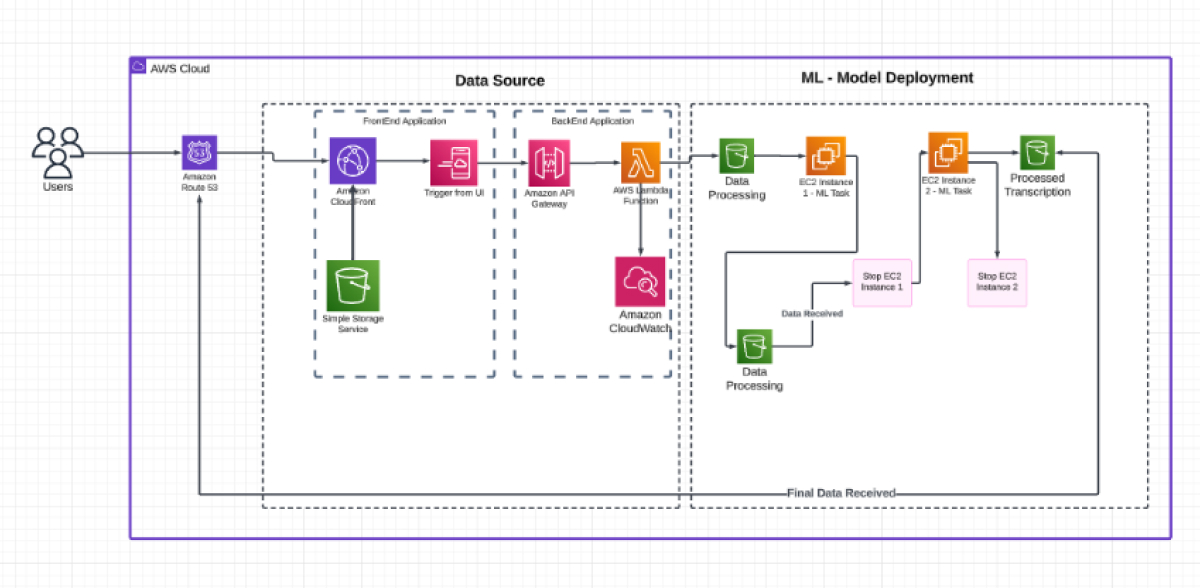

ML Architecture

Situation

Svarupa is pioneering the transcription of long Sanskrit mantra chanting recordings (often exceeding 30 minutes) into accurately timestamped text. This challenging task requires splitting lengthy recordings, slowing them down to improve recognition accuracy, and handling the nuances of the Sanskrit language.

Task

Key challenges include:

- Handling Long Audio Files

Vedic chants are recorded in extended sessions, creating large audio files that must be split into manageable segments.

- Optimizing Speech Recognition for Chanting

The chanting speed often exceeds normal speech rates, which degrades transcription quality.

- High Processing Demand

The number and duration of files require efficient processing and high scalability.

- Accurate Timestamps and Repetition Handling

Accurate word-level timestamps and the elimination of repeated or erroneous transcriptions are essential for meaningful, clean output.

Action

To meet these challenges, an ML-centric architecture was implemented, focusing on the following areas:

- Audio Segmentation and Pre-processing

- A silence detection algorithm segments long audio files, creating manageable audio clips.

- Audio speed normalization aligns the speech rate with the model’s training data, enhancing transcription quality.
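The silence-based segmentation step can be sketched as follows. This is a minimal energy-based silence detector, assuming mono PCM samples normalized to [-1, 1] in a Python list; the function names, the 20 ms frame size, the 0.01 RMS threshold, and the 300 ms minimum pause are illustrative assumptions, not values from Svarupa's pipeline.

```python
import math
from typing import List, Tuple


def frame_rms(samples: List[float], frame_len: int) -> List[float]:
    """Root-mean-square energy of consecutive, non-overlapping frames."""
    energies = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(s * s for s in frame) / len(frame)))
    return energies


def split_on_silence(samples: List[float], sample_rate: int, frame_ms: int = 20,
                     threshold: float = 0.01, min_silence_ms: int = 300) -> List[Tuple[int, int]]:
    """Return (start, end) sample ranges of voiced audio separated by long silences.

    Hypothetical helper: thresholds are assumptions and would need tuning per recording.
    """
    frame_len = max(1, sample_rate * frame_ms // 1000)
    min_silent_frames = max(1, math.ceil(min_silence_ms / frame_ms))
    energies = frame_rms(samples, frame_len)

    segments: List[Tuple[int, int]] = []
    seg_start = None  # frame index where the current voiced segment began
    silent_run = 0    # number of consecutive quiet frames seen so far
    for i, energy in enumerate(energies):
        if energy >= threshold:
            if seg_start is None:
                seg_start = i
            silent_run = 0
        else:
            silent_run += 1
            if seg_start is not None and silent_run >= min_silent_frames:
                # The pause is long enough: close the segment where the silence began.
                end_frame = i - silent_run + 1
                segments.append((seg_start * frame_len, end_frame * frame_len))
                seg_start = None
    if seg_start is not None:
        # Close the final segment, trimming any short trailing silence.
        end_frame = len(energies) - silent_run
        segments.append((seg_start * frame_len, min(end_frame * frame_len, len(samples))))
    return segments
```

Cutting inside a pause rather than at fixed offsets avoids splitting a word mid-syllable, while short pauses below the minimum duration stay inside a segment. Speed normalization is a separate step not shown here; one common option is ffmpeg's `atempo` filter, which changes tempo without changing pitch.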

- Model Selection and Implementation

- The Whisper Large V2 model was chosen for its accuracy on multilingual audio input. The model transcribes each audio clip individually.

- Dynamic Time Warping (DTW) was integrated to refine word-level timestamps and address errors due to repetition or noise in the input.
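DTW finds the lowest-cost monotonic alignment between two sequences. The plain-Python sketch below, an illustration rather than Svarupa's code, aligns text tokens (rows) to audio frames (columns) through a cost matrix and reads each token's start and end time off the alignment path. The cost values and the 20 ms frame duration (`frame_sec`) are assumptions; for reference, the open-source openai-whisper package's `word_timestamps=True` option applies DTW to a cost derived from the model's cross-attention weights.

```python
import math
from typing import List, Tuple


def dtw_path(cost: List[List[float]]) -> List[Tuple[int, int]]:
    """Return the minimum-cost monotonic alignment path as (token, frame) pairs."""
    n, m = len(cost), len(cost[0])
    # acc[i][j] = cheapest cumulative cost of aligning the first i tokens with the first j frames
    acc = [[math.inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i][j] = cost[i - 1][j - 1] + min(
                acc[i - 1][j - 1],  # diagonal: advance token and frame
                acc[i - 1][j],      # vertical: advance token only
                acc[i][j - 1],      # horizontal: advance frame only
            )
    # Backtrack from the bottom-right corner to recover the path.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        moves = {(i - 1, j - 1): acc[i - 1][j - 1],
                 (i - 1, j): acc[i - 1][j],
                 (i, j - 1): acc[i][j - 1]}
        i, j = min(moves, key=moves.get)
    path.reverse()
    return path


def token_times(path: List[Tuple[int, int]], n_tokens: int,
                frame_sec: float = 0.02) -> List[Tuple[float, float]]:
    """Start/end time in seconds of each token, from the frames aligned to it."""
    first = [None] * n_tokens
    last = [None] * n_tokens
    for tok, frame in path:
        if first[tok] is None:
            first[tok] = frame
        last[tok] = frame
    return [(first[t] * frame_sec, (last[t] + 1) * frame_sec) for t in range(n_tokens)]
```

With the toy cost matrix `[[0, 0, 1, 1], [1, 1, 0, 0]]`, the first token aligns to frames 0 and 1 and the second to frames 2 and 3, giving spans of 0.00 to 0.04 s and 0.04 to 0.08 s.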

- User Interface (UI)

Allows users to upload audio files, which triggers the transcription pipeline.

- AWS Lambda Trigger

Starts the transcription process when an audio file is uploaded to Amazon S3.
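A minimal sketch of the trigger's entry point, assuming a standard S3 object-created event notification delivered to a Python Lambda function. S3 URL-encodes object keys in these events (a space arrives as `+`), so `unquote_plus` is needed for filenames containing spaces or other special characters. The helper `extract_uploads`, the bucket name in the example, and the returned payload are hypothetical; the hand-off to SQS is only indicated in a comment so the code runs offline.

```python
from typing import List, Tuple
from urllib.parse import unquote_plus


def extract_uploads(event: dict) -> List[Tuple[str, str]]:
    """Pull (bucket, key) pairs out of an S3 event, skipping malformed records."""
    uploads = []
    for record in event.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        raw_key = s3.get("object", {}).get("key")
        if not bucket or not raw_key:
            continue  # missing data: skip this record rather than failing the whole batch
        # Keys are URL-encoded in event notifications, e.g. "Sri+Rudram.mp3".
        uploads.append((bucket, unquote_plus(raw_key)))
    return uploads


def lambda_handler(event, context):
    uploads = extract_uploads(event)
    # In a real deployment each upload would be queued here, for example with
    # boto3's sqs.send_message(QueueUrl=..., MessageBody=...); omitted in this sketch.
    return {"queued": [f"s3://{bucket}/{key}" for bucket, key in uploads]}
```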

- Fetches the uploaded audio from S3, applies silence removal, slows down the audio to improve transcription accuracy, splits it into 1-minute clips, and re-uploads the processed clips to S3.

- Retrieves each audio clip from S3, transcribes it with the Whisper Large V2 model, and applies Dynamic Time Warping (DTW) to obtain precise word-level timestamps. Post-processing then removes duplicate or erroneous text segments.

- Queue Management: Amazon Simple Queue Service (SQS) queues transcription tasks so they are processed one at a time, avoiding concurrent requests that could lead to resource conflicts and keeping processing smooth and uninterrupted.
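The duplicate-removal step in post-processing could be approached as below. This is one possible heuristic, not the method Svarupa used. Because chants legitimately repeat the same mantra, a segment is treated as a duplicate only when its normalized text matches the previous kept segment and it also overlaps that segment in time, as can happen when a model emits the same text twice for one stretch of audio. Empty and near-zero-length segments are dropped. The `Segment` type and the 50 ms minimum duration are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    start: float  # seconds
    end: float    # seconds
    text: str


def _norm(text: str) -> str:
    """Case- and whitespace-insensitive comparison key."""
    return " ".join(text.lower().split())


def dedupe_segments(segments: List[Segment], min_duration: float = 0.05) -> List[Segment]:
    """Drop empty, near-zero-length, and time-overlapping repeated segments."""
    cleaned: List[Segment] = []
    for seg in sorted(segments, key=lambda s: s.start):
        key = _norm(seg.text)
        if not key or seg.end - seg.start < min_duration:
            continue  # empty text or a degenerate timestamp
        if cleaned and _norm(cleaned[-1].text) == key and seg.start < cleaned[-1].end:
            continue  # same text overlapping the previous segment: treat as a duplicate
        cleaned.append(seg)  # genuine repetitions at later times are kept
    return cleaned
```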

- Error Handling and Robustness

Error-checking mechanisms were implemented to handle common issues such as:

- Incorrect or missing audio URLs.

- EC2 startup failures or connectivity issues.

- Special characters in audio filenames.

- A queuing mechanism prevents parallel transcription requests that could cause EC2 instances to shut down prematurely.
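The queuing and validation behaviour can be sketched as a single worker that takes jobs one at a time, so no two transcriptions run in parallel. Here Python's `queue.Queue` stands in for SQS (a real worker would poll with boto3's `receive_message` and `delete_message`), and `parse_s3_url`, `safe_local_name`, and `drain_sequentially` are hypothetical helpers. The URL is split by hand because `urllib.parse.urlparse` would treat `#` or `?` in a filename as a fragment or query.

```python
import queue
import re


def parse_s3_url(url):
    """Return (bucket, key), raising ValueError for missing or malformed URLs."""
    if not url:
        raise ValueError("missing audio URL")
    if not url.startswith("s3://"):
        raise ValueError(f"not an s3:// URL: {url!r}")
    # Split by hand: urlparse would treat '#' or '?' in a filename as a fragment/query.
    bucket, _, key = url[len("s3://"):].partition("/")
    if not bucket or not key:
        raise ValueError(f"missing bucket or key in {url!r}")
    return bucket, key


def safe_local_name(key):
    """Map an S3 key to a filesystem-safe temp filename (hypothetical helper)."""
    name = key.rsplit("/", 1)[-1]
    return re.sub(r"[^A-Za-z0-9._-]", "_", name) or "audio"


def drain_sequentially(jobs, transcribe):
    """Take jobs off the queue one at a time so only one transcription runs at once."""
    results, errors = [], []
    while True:
        try:
            url = jobs.get_nowait()
        except queue.Empty:
            break
        try:
            bucket, key = parse_s3_url(url)
            results.append(transcribe(bucket, key, safe_local_name(key)))
        except ValueError as exc:
            errors.append(str(exc))  # record the bad job and keep draining
        finally:
            jobs.task_done()
    return results, errors
```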

- Data Handling and Security

- Secure handling of sensitive audio data is critical. Audio is stored encrypted in Amazon S3, and role-based access through AWS Identity and Access Management (IAM) secures each step of the workflow.

- Scalability and Performance

- Resource Allocation: Dynamic resource management allowed for scaling ML model instances up or down based on demand, ensuring efficient resource usage.

- Processing Speed: The optimized architecture reduced processing time; a 45-minute audio file is processed in approximately 4 minutes per instance.

- Accuracy Improvements: The use of pre-processing and DTW significantly enhanced the transcription accuracy, ensuring better quality outputs.
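As a rough check of the reported processing speed, handling 45 minutes of audio in about 4 minutes corresponds to a real-time factor (processing time divided by audio duration) of roughly 0.09, or about 11 times faster than real time. The snippet below only encodes that arithmetic and assumes the 4-minute figure covers the whole 45-minute file on a single instance.

```python
def real_time_factor(processing_min: float, audio_min: float) -> float:
    """Processing time divided by audio duration; values below 1 are faster than real time."""
    return processing_min / audio_min


rtf = real_time_factor(4, 45)
speedup = 45 / 4  # minutes of audio handled per minute of processing
print(f"RTF = {rtf:.3f}, speed-up = {speedup:.2f}x")  # prints: RTF = 0.089, speed-up = 11.25x
```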

Results

This ML-driven transformation empowered Svarupa to achieve the following:

- Reduced Processing Time: Audio files are processed much faster with the new pipeline; a 45-minute recording takes approximately 4 minutes per instance.

- Improved Accuracy: Audio pre-processing and DTW-based alignment yield precise word-level timestamps and fewer transcription errors.

- Global Accessibility: Users now have access to high-quality transcriptions, supporting both academic and personal study of Vedic chants.

Conclusion

With Soulax’s guidance, Svarupa successfully transformed its transcription process into a scalable, automated pipeline capable of handling complex Sanskrit chants. This new infrastructure has empowered Svarupa to fulfill its mission of preserving Vedic heritage, making these valuable texts accessible to a global audience through a modern, efficient, and user-friendly platform.